The other day, I planned to take my 15-year-old son to the movie theatre to see “The Hateful Eight” in 70mm film format. The theatre would not let him in. Under article 240a of the Dutch penal code, it is a felony to show a movie to a minor when that movie is rated 16 or above. Even though I consider myself responsible for what my son gets to see, I understand why the rating agency put a 16+ stamp on this politically-incorrect-gun-slinging-gore-and-curse-intense-comedy feature. All this is to say that in (liberal and democratic) Dutch society, blocking and filtering communication is a fact of life, even in contexts outside the Internet.

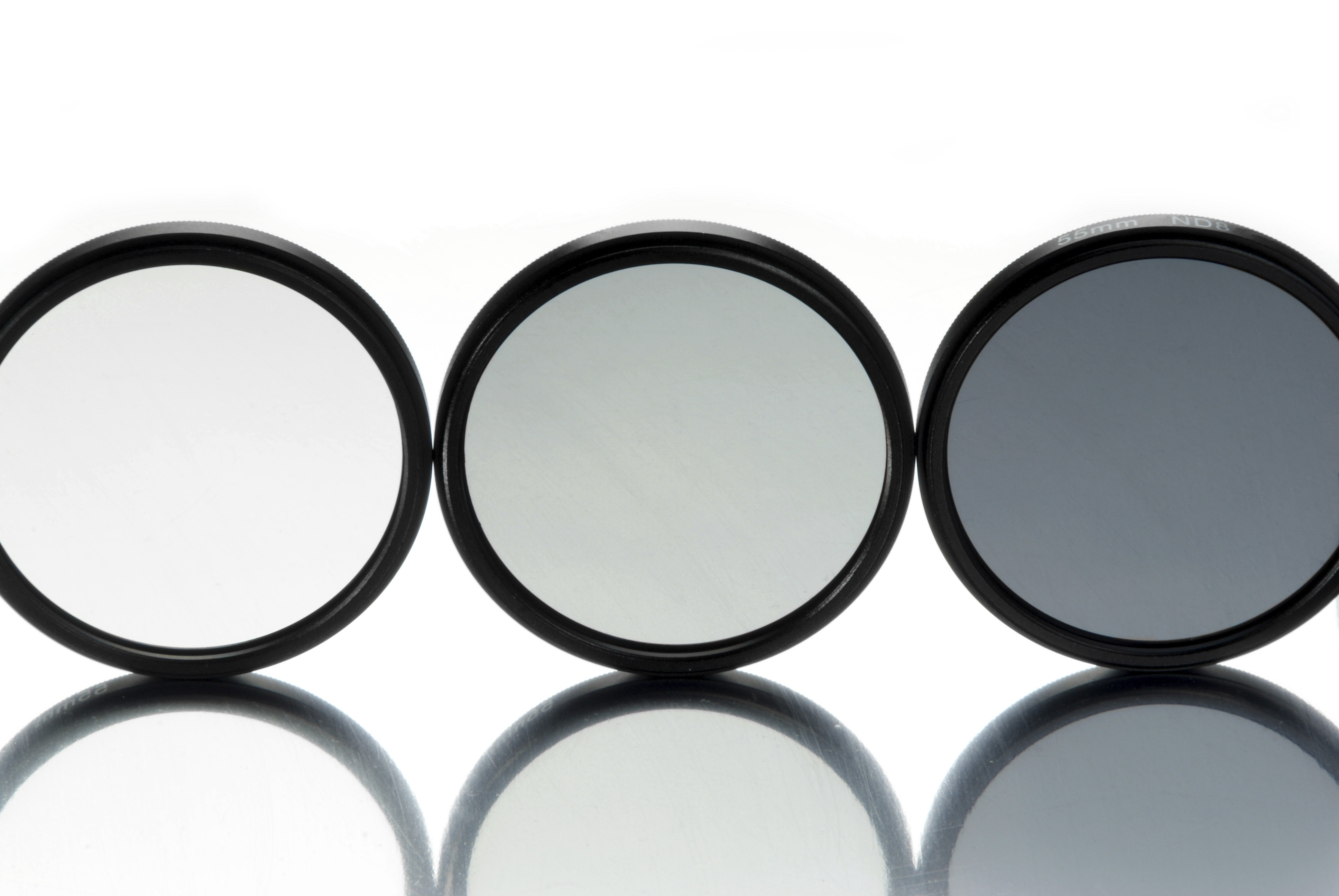

On the Internet, there are many reasons why blocking and filtering of communication take place. Some of us use ad-blockers because we get tired of seeing kitchen appliance ads on every single page we visit, simply because we have been browsing online recipes. Parents may want to block adult content for minors. Companies may want to ensure their confidential trade secrets don’t leak. All of us want to keep viruses and malware off our computers and networks. Other reasons to block communication include copyright protection, preventing illegal trade (pills, handbags, gambling), and public safety, health, and/or moral concerns, the latter triad often being a motivation for the type of blocking and filtering we call censorship.

It may be useful to distinguish between the Internet infrastructure and its content: often, the motivation for blocking and filtering is based on content. A notable exception is captive portals, where the purpose of blocking is to get users to pay for access to the network as a whole. Here I am reflecting on blocking and filtering as tools to implement a policy about the content carried on the network. When defining this kind of policy, and when translating it into a technical implementation, our Collaborative Security framework, while perhaps not fully applicable, provides useful input for an approach.

The five key elements from the Collaborative Security approach suggest that during the policy-technology translation, one should:

Foster Confidence and Protect Opportunities. This results in a requirement to be transparent about the policies followed and to make sure that the implementation does not negatively impact the opportunities of those not directly involved. For example, the Internet Engineering Task Force recently specified an HTTP status code, 451 (Unavailable For Legal Reasons), that allows blocking entities to explain why specific information is being blocked. The transparency gained with these Error 451 messages is expected to help foster confidence.
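To make the transparency point concrete, here is a minimal sketch of a web application that answers requests for blocked content with status 451, following RFC 7725. It uses only Python's standard WSGI calling convention. The blocklist, the `/blocked-page` path, the `blocker.example` URI, and the court-order wording are hypothetical placeholders, not taken from any real deployment. RFC 7725 says such a response SHOULD carry a `Link` header with `rel="blocked-by"` identifying the entity implementing the block, and SHOULD explain the legal demand in the body; the sketch does both.

```python
"""Minimal WSGI sketch of an HTTP 451 response (RFC 7725).

Hypothetical, for illustration only: the BLOCKED table, its paths,
the blocker.example URI, and the reason text.
"""
from typing import Callable, Dict, Iterable, List, Tuple

# Hypothetical blocklist: request path -> (URI of the blocking entity, reason shown to the user).
BLOCKED: Dict[str, Tuple[str, str]] = {
    "/blocked-page": (
        "https://blocker.example/",
        "Access to this resource is restricted under a (hypothetical) court order.",
    ),
}

StartResponse = Callable[[str, List[Tuple[str, str]]], object]


def app(environ: dict, start_response: StartResponse) -> Iterable[bytes]:
    path = environ.get("PATH_INFO", "/")
    if path in BLOCKED:
        blocker, reason = BLOCKED[path]
        # The body tells the user *why* the content is unavailable.
        body = (
            "<html><body><h1>Unavailable For Legal Reasons</h1>"
            f"<p>{reason}</p></body></html>"
        ).encode("utf-8")
        start_response(
            "451 Unavailable For Legal Reasons",
            [
                ("Content-Type", "text/html; charset=utf-8"),
                # RFC 7725: identify the entity implementing the block.
                ("Link", f'<{blocker}>; rel="blocked-by"'),
                ("Content-Length", str(len(body))),
            ],
        )
        return [body]

    # Everything not on the blocklist is served normally.
    body = b"Hello, unfiltered world.\n"
    start_response(
        "200 OK",
        [("Content-Type", "text/plain; charset=utf-8"), ("Content-Length", str(len(body)))],
    )
    return [body]
```

To try it locally, one could serve it with the standard library's `wsgiref.simple_server.make_server("localhost", 8000, app).serve_forever()` and request `/blocked-page` with `curl -i`. The point of the design is that the user (and any measurement tool) can tell a deliberate, attributable block apart from an outage or an attack.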

Take into account Collective Responsibility towards the Internet as a whole. Related to protecting opportunities, the blocking party should be aware that it carries a responsibility towards the system. Some techniques may adversely impact the way the Internet is collectively managed. Sometimes the impact is indirect: users will try to work around the block, and their workarounds may cause damage.

Honor Fundamental Properties and Values. The most obvious set of fundamental values is Human Rights, but there are also the Internet Invariants, such as integrity and global reach: features of the technical architecture that, if impacted significantly and over the long term, would adversely shape the course of the Internet’s future.

Take into account Evolution and Consensus. Both the way policy requirements are expressed and the detailed methods to implement them are evolving. The technology-neutral expression of a policy requirement needs to involve a broad set of stakeholders, including technical specialists, to ensure there are no side effects that negatively impact the other key aspects mentioned here.

Think Globally, Act Locally. Local blocking and filtering can have global effects (e.g., local changes to the routing system can impact traffic flows around the globe). On the other hand, blocking close to the edge, as locally as possible, may well minimize global impact.

A recent Internet Architecture Board publication, RFC 7754, “Technical Considerations for Internet Service Blocking and Filtering”, provides advice to those who translate policy requirements into implementations and helps address some of the key aspects mentioned above.

The document looks at different design patterns that can be applied in the policy-technology translation and assesses the scope, granularity, efficacy, and security of various approaches. The discussion of scope tries to answer whether blocking and filtering can be localized enough to target a given jurisdiction or policy realm without having more than local impact. Granularity assesses whether innocent bystanders are likely to be affected. Efficacy addresses how effective the measure is and informs a risk discussion around the implementation. Finally, the security discussion assesses the security implications of each approach.

Understanding these technical features is important when someone (often a judge) has to decide whether the medicine is worse than the disease. If an implementation has more than local scope, affects more than just the targeted form of communication, and is not going to be effective, then the societal costs may be too high.

As an example of this type of analysis: in 2011, my colleagues wrote about a workshop on DNS blocking. Let’s annotate the findings from that workshop:

- DNS blocking/filtering does not solve the problem, as blocking access to a website does not mean that the content simply disappears from the online space; it only becomes inaccessible at a certain location, and it can easily be moved to a different one. The measure can, therefore, always be circumvented. [This is an Efficacy argument]

- It has implications for privacy and security; [A Security argument]

- It is incompatible with DNSSEC and indistinguishable from DNS attacks; [A Security argument]

- It encourages Internet fragmentation/balkanization, affecting the universal resolvability of the Internet. Instead of having one global Internet, we move towards having a fragmented, country-by-country Internet; [A Scope and a Collaborative Security argument]

- It may prevent people from accessing legal content, thus affecting people’s right to information. When a website is blocked because it hosts illegal content, access to the legal content hosted on the respective website is also blocked; [A Granularity argument]

- It makes it even more difficult to attack the source of the problem, as it may function as an early warning system for criminals. [A Security argument]
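The Granularity and Efficacy annotations can be made concrete with a toy model. The sketch below is an illustration under stated assumptions, not a model of any real resolver or filter: all host names and paths are hypothetical, and name-based blocking stands in for DNS blocking because, like the DNS, it can only act on the host name. It compares a host-level filter with an exact-URL filter when one page on a shared host is unlawful, and counts both collateral damage and copies that escape after the content moves.

```python
"""Toy comparison of filtering granularity and efficacy (all hosts/paths hypothetical)."""
from typing import Callable, List
from urllib.parse import urlsplit

# Hypothetical: a single page on a shared host carries unlawful content.
UNLAWFUL_URLS = {"http://shared-host.example/unlawful-item"}
# A name-based blocklist (in the spirit of DNS blocking) can only list host names.
BLOCKED_HOSTS = {urlsplit(u).hostname for u in UNLAWFUL_URLS}


def blocked_by_host(url: str) -> bool:
    """Coarse filter: everything under a blocked host name is unreachable."""
    return urlsplit(url).hostname in BLOCKED_HOSTS


def blocked_by_url(url: str) -> bool:
    """Fine filter: only the exact offending resource is unreachable."""
    return url in UNLAWFUL_URLS


REQUESTS: List[str] = [
    "http://shared-host.example/unlawful-item",
    "http://shared-host.example/recipes",
    "http://shared-host.example/news",
    "http://other-host.example/recipes",
    # The same content, moved to a new (hypothetical) host after the block.
    "http://mirror.example/unlawful-item",
]


def collateral_damage(is_blocked: Callable[[str], bool]) -> List[str]:
    """Lawful requests that the filter blocks anyway (the granularity problem)."""
    return [u for u in REQUESTS if is_blocked(u) and u not in UNLAWFUL_URLS]


def missed_copies(is_blocked: Callable[[str], bool]) -> List[str]:
    """Copies of the unlawful item that the filter lets through (the efficacy problem)."""
    return [u for u in REQUESTS
            if urlsplit(u).path == "/unlawful-item" and not is_blocked(u)]
```

Here `collateral_damage(blocked_by_host)` returns the two lawful pages on the shared host, while `collateral_damage(blocked_by_url)` returns an empty list; yet both filters let the mirrored copy through. In practice the finer filter is not free either: with HTTPS, a network-based filter can typically see the host name but not the path, so URL-level filtering in the network would require intercepting encrypted traffic, trading granularity against security.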

Rather than looking at specific technologies, the RFC takes a broader approach and examines the properties of rendezvous systems (of which the DNS is an example), the network (routing system, network flows, etc.), and the end-points.

It concludes that there are no perfect, or even best, ways to perform blocking and filtering; there only seem to be less bad ways, probably hybrid approaches that are implemented at the end-point and rely on information from the network. It also makes one other interesting observation:

“where filtering is occurring to address content that is generally agreed to be inappropriate or illegal, strong cooperation among service providers and governments may provide additional means to identify both the victims and the perpetrators through non-filtering mechanisms, such as partnerships with the finance industry to identify and limit illegal transactions.”

In other words, technology may not always be the appropriate tool to fulfil a policy requirement. So not only think globally, act locally, but also think creatively and act collaboratively.

Disclaimer: I am a co-author of RFC 7754. This blog post is a personal reflection and does not necessarily reflect the Internet Society’s opinion, the opinion of my RFC 7754 co-authors, or that of the IAB.